Navigating Ethical Decisions in Artificial Intelligence

A Crucial Crossroads

The development of artificial intelligence is at a critical juncture. The choice is between prioritizing efficiency in pursuit of goals and crafting technology that honors diverse human values. The decision typically falls to some combination of policymakers, industry leaders, researchers, and ethicists, and how consciously it is made depends on the awareness and deliberation of those involved.

So, who is making this decision? And is it being made consciously?

The rapid progress of artificial intelligence (AI) has led to remarkable advances, such as self-driving cars and language models that can write like humans. However, these advances also raise important ethical questions about how we should guide the creation of ever smarter systems that are expected to lead to artificial general intelligence: AI systems able to understand, learn, and apply knowledge across a wide range of tasks, much as human intelligence does.

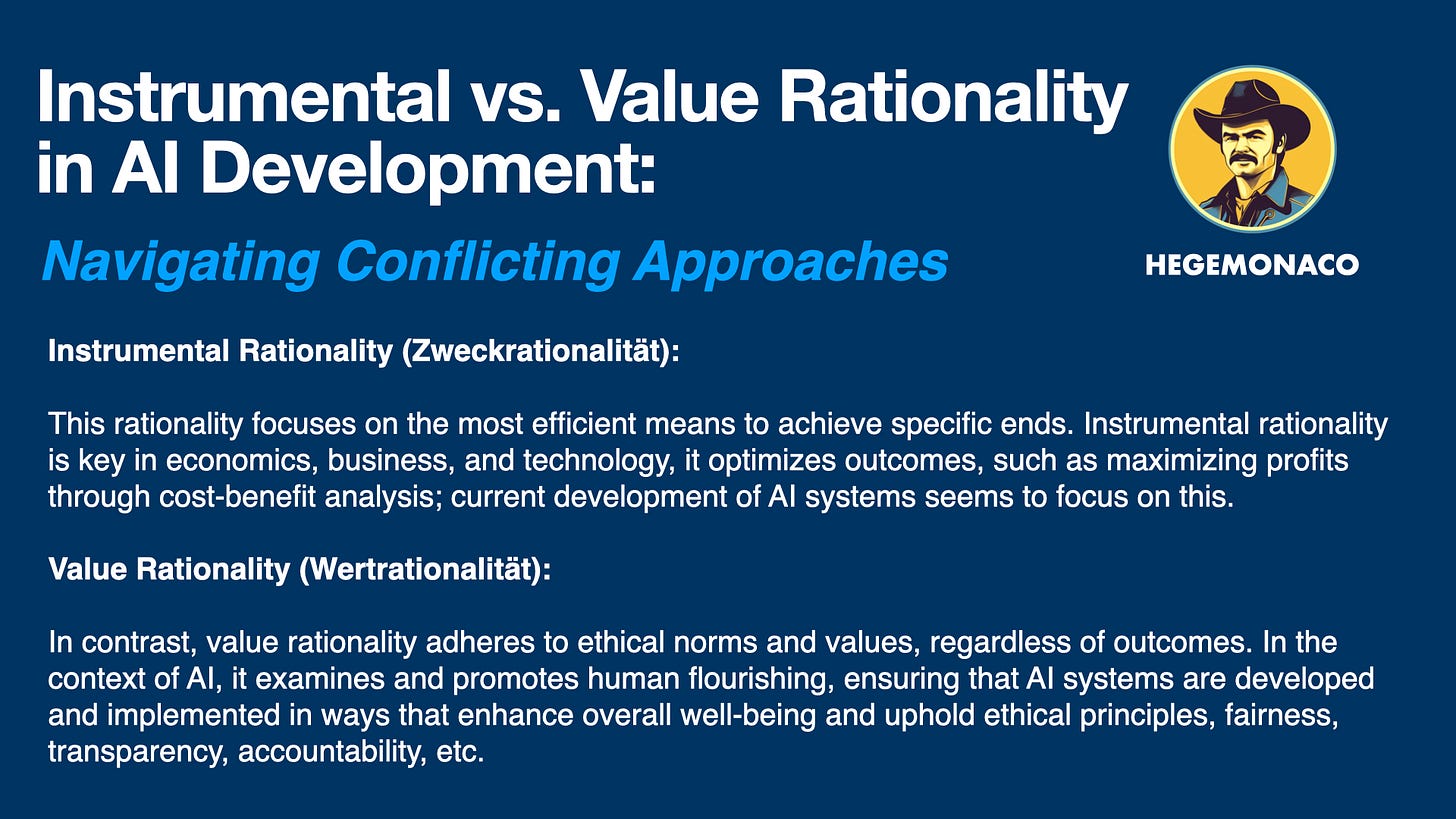

Max Weber, a renowned German sociologist, introduced the concepts of Zweckrationalität (instrumental rationality) and Wertrationalität (value rationality), which shed light on our contemporary quandary.

Zweckrationalität emphasizes the efficient attainment of particular objectives, typically through cost-benefit and means-ends assessments. Applied to AI development, this perspective stresses improving system efficiency and capabilities, sometimes overlooking wider societal implications. The aim is often the efficient pursuit of profit.

Wertrationalität, on the other hand, prioritizes core values and ethical principles above all else. In AI development, this approach would make ethics a central concern, possibly limiting the pursuit of greater capabilities and profit in order to ensure beneficence and respect for human values.

This moment requires balance, but who has the power to intervene when Silicon Valley is making all of the decisions? Senator Schumer is currently leading an AI Task Force that advocates a collaborative effort to harness AI's potential, an effort spearheaded by the bipartisan AI working group.

Evan Greer, director at Fight for the Future, a nonprofit digital rights advocacy group, has criticized Senator Schumer’s new AI framework, likening it to a document authored by Sam Altman and Big Tech lobbyists. Greer highlights the framework's emphasis on "innovation" and its neglect of substantive issues such as discrimination, civil rights, and the prevention of AI-related harms. She expressed dismay at the proposal's allocation of taxpayer funds to AI research for military, defense, and private sector gain.

While the roadmap aims to foster American innovation, Congress is also working to address the risks associated with AI, including through a series of AI-related bills sponsored by Sen. Amy Klobuchar, D-Minn., chairwoman of the Senate Rules Committee.

Here, we see the conflict between these two perspectives through the views of AI researchers, ethicists, policymakers, and impacted communities.

As the trajectory of AI development accelerates beyond our moral comprehension, it's imperative to confront the critical risks of bias, privacy infringements, and existential dangers.

We must acknowledge that conscious decisions are being made today that favor instrumental rationality over the value rationality that promises human advancement, particularly the values that concern the varied facets of human well-being.

Now is the moment to explore avenues for crafting AI systems that seamlessly integrate high performance and technological advancement with a robust ethical framework, ultimately prioritizing human welfare above all else.

However, it's vital to recognize that humans often make decisions hastily, believing they are acting in the "best interest." But whose best interest is the priority?

The "Common Good" in the twenty-first century is a complex topic.